What if you have an Azure IoT solution, recently added Azure Data Explorer (ADX), and also want to ingest your historical data stored in a Storage Account? Or what if another service, for example an Azure Function, stores its data in a Storage Account and you want to ingest that data into Azure Data Explorer?

There are several ways to do this, for instance by ingesting the data programmatically (a blog by Sander van de Velde) or by using a function app that ingests data into Azure Data Explorer through an Event Hub. In this article, however, I will use the standard Azure Data Explorer tooling: LightIngest.

Prepare a Storage Account

Before we can ingest data into Azure Data Explorer, we will need a Storage Account with several files. Luckily, I have an IoT Hub which already sends its data to a Storage Account:

To be sure, I also verified that the Storage Account contains files:

Now that we have a base, let’s start to ingest.

Azure Data Explorer ingest

I wanted to use LightIngest to ingest the data into Azure Data Explorer, so the first thing to do is install LightIngest. It can be downloaded as part of the Microsoft.Azure.Kusto.Tools NuGet package. For installation instructions, see Install LightIngest.

LightIngest can be used directly from a command-line interface, but there is an easier way to set up the ingestion. First, open Azure Data Explorer, navigate to “Data” (no. 1 in the screenshot below) and then select “Ingest data” (no. 2).

Destination

A wizard opens that walks us through the process, starting with the selection of the cluster, database and table:

Source

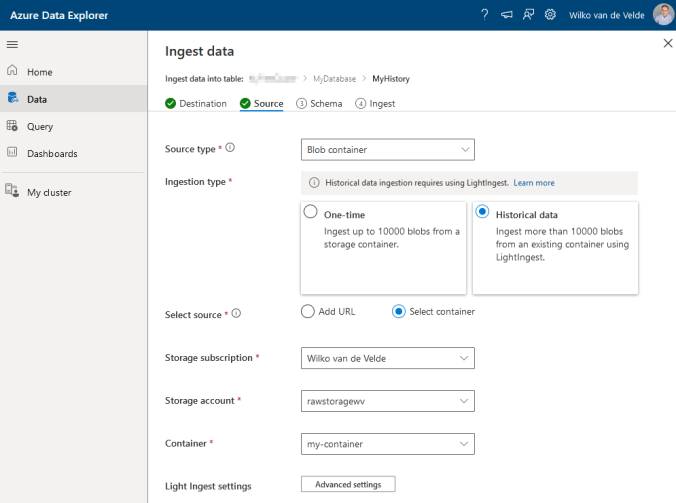

Next, we select the source type; in our case, this is a Blob container. With only four files in the Storage Account, the “One-time” ingestion type would also work. But I want to use LightIngest, so I selected “Historical data” and then selected my Storage Account.

You can filter which files will be ingested (see the arrows in the screenshot below). We skip this, because we only have four files.
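The filter narrows the blob list down before anything is ingested. LightIngest itself offers `-prefix` (a blob path prefix, excluding the container name) and `-pattern` (a wildcard on the blob name) for the same purpose. The sketch below only illustrates that selection logic locally; `select_blobs` and the blob names are hypothetical, and Python's `fnmatch` wildcards may not behave exactly like LightIngest's.

```python
from fnmatch import fnmatchcase


def select_blobs(blob_names, prefix="", pattern="*"):
    """Return the blob names that start with `prefix` and match the wildcard `pattern`.

    A local illustration of prefix/pattern filtering; this is not
    LightIngest's own matching code.
    """
    return [
        name
        for name in blob_names
        if name.startswith(prefix) and fnmatchcase(name, pattern)
    ]


# Hypothetical blob names in the style of IoT Hub storage routing output.
blobs = [
    "myhub/00/2023/01/10/08/15.json",
    "myhub/00/2023/01/10/09/15.json",
    "myhub/01/2023/01/11/08/15.json",
    "myhub/01/2023/01/11/08/16.avro",
]

print(select_blobs(blobs, prefix="myhub/00/", pattern="*.json"))
```

With no filter at all, every blob is selected, which is what happens in our four-file case.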

The bottom of the screen lists the files that will be ingested. Select one of them to define the schema.

Schema

In this step, the selected file is used to define the schema of the table. Azure Data Explorer already recognized that the file is JSON and that it is uncompressed. However, all telemetry of the IoT message ends up in a single column:

Therefore, I changed “Nested levels” to two, so that Azure Data Explorer splits that single column into several columns. If your IoT message has a deeper hierarchy, you may need to raise “Nested levels” further.
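To make the effect of “Nested levels” concrete, here is a minimal sketch that expands nested JSON objects into separate columns up to a given depth. It assumes a hypothetical IoT Hub message whose `Body` is stored as JSON (IoT Hub stores it base64-encoded unless the message has a JSON content type and UTF-8 encoding). The `_` separator for column names is also an assumption; the wizard may name columns differently.

```python
def flatten(record, levels, parent="", sep="_"):
    """Expand nested JSON objects into columns, at most `levels` levels deep.

    Objects found at the deepest allowed level are kept whole as a single
    column. The `sep` used to build column names is an assumption.
    """
    columns = {}
    for key, value in record.items():
        name = f"{parent}{sep}{key}" if parent else key
        if isinstance(value, dict) and levels > 1:
            # Go one level deeper: each property becomes its own column.
            columns.update(flatten(value, levels - 1, name, sep))
        else:
            columns[name] = value
    return columns


# Hypothetical IoT Hub message as routed to storage (Body already decoded JSON).
message = {
    "EnqueuedTimeUtc": "2023-01-10T08:15:00.000Z",
    "SystemProperties": {"connectionDeviceId": "device-01"},
    "Body": {"temperature": 21.5, "humidity": 48},
}

print(list(flatten(message, 1)))  # ['EnqueuedTimeUtc', 'SystemProperties', 'Body']
print(list(flatten(message, 2)))
# ['EnqueuedTimeUtc', 'SystemProperties_connectionDeviceId', 'Body_temperature', 'Body_humidity']
```

At one level, the whole telemetry payload sits in the `Body` column; at two levels, each telemetry property gets its own column.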

The next step is to delete the columns that are not needed. You can do this from the drop-down menu of each column:

In the same menu, you can change the column name, data type and mapping. Take your time with this step, so that the data is stored in the table with the right column names and data types. In my case, the result is as follows:
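Behind the scenes, these choices become a table schema and a JSON ingestion mapping that links each column to a JSON path in the source file. The sketch below builds the corresponding Kusto control commands (`.create table` and `.create table ... ingestion json mapping`) from a column list. The table name `Telemetry`, the mapping name `Telemetry_mapping` and the column choices are hypothetical; the commands the wizard creates for you may differ in names and details.

```python
import json

# Hypothetical column choices after deleting and renaming columns in the wizard:
# (column name, Kusto data type, JSON path in the source file)
COLUMNS = [
    ("EnqueuedTimeUtc", "datetime", "$.EnqueuedTimeUtc"),
    ("DeviceId", "string", "$.SystemProperties.connectionDeviceId"),
    ("Temperature", "real", "$.Body.temperature"),
    ("Humidity", "real", "$.Body.humidity"),
]


def create_table_command(table, columns):
    """Build a '.create table' command with the given column names and types."""
    schema = ", ".join(f"{name}: {kusto_type}" for name, kusto_type, _ in columns)
    return f".create table {table} ({schema})"


def create_mapping_command(table, mapping_name, columns):
    """Build a '.create table ... ingestion json mapping' command for the columns."""
    mapping = [{"column": name, "Properties": {"Path": path}} for name, _, path in columns]
    return (
        f'.create table {table} ingestion json mapping "{mapping_name}" '
        f"'{json.dumps(mapping)}'"
    )


print(create_table_command("Telemetry", COLUMNS))
print(create_mapping_command("Telemetry", "Telemetry_mapping", COLUMNS))
```

Keeping the column list in one place makes it easy to see which JSON path feeds which column and with which type.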

Ingest

The last step prepares the ingestion, for example by creating the table and the table mapping, and generates a command for LightIngest. Copy the command, open a command prompt in the folder where you installed LightIngest, and paste the command.
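If you want to rerun or script the command later, it can help to see its parts. The sketch below assembles a LightIngest argument list using flags from the LightIngest documentation (`-database`, `-table`, `-source`, `-pattern`, `-format`, `-ingestionMappingRef`); compare them with the command the wizard generated for you, which may contain more options. All cluster, database, storage and mapping names, the SAS token placeholder and the `json` format are hypothetical.

```python
import shlex


def lightingest_args(ingest_uri, database, table, source, mapping_ref,
                     pattern="*.json", data_format="json"):
    """Assemble a LightIngest argument list.

    Flag names follow the LightIngest documentation; check them against
    the command the wizard generated for your version of the tool.
    """
    return [
        "LightIngest",
        f"{ingest_uri};Fed=True",  # Azure AD (federated) authentication
        f"-database:{database}",
        f"-table:{table}",
        f"-source:{source}",
        f"-pattern:{pattern}",
        f"-format:{data_format}",
        f"-ingestionMappingRef:{mapping_ref}",
    ]


# All values below are hypothetical placeholders.
args = lightingest_args(
    ingest_uri="https://ingest-mycluster.westeurope.kusto.windows.net",
    database="iotdb",
    table="Telemetry",
    source="https://mystorageaccount.blob.core.windows.net/iot-archive?<SAS token>",
    mapping_ref="Telemetry_mapping",
)

# Quoted for a POSIX shell; quoting rules in the Windows command prompt differ.
print(shlex.join(args))

# To run it without any shell quoting (needs LightIngest on PATH and real values):
# import subprocess; subprocess.run(args, check=True)
```

Passing the list to `subprocess.run` sidesteps quoting problems with the `;` and `?` characters in the connection string and source URL.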

Note: you may receive an “Unauthorized” error. In that case, configure the tenant permissions as described in this Microsoft article.

After a couple of minutes (depending on how much data you ingest), the data is ingested. In my case, the progress bar didn’t work, as the image below shows: it stayed at “0 of 4 items completed” until the very last line of output. This was inconvenient, because at first I thought the ingestion had failed.

So, did it work?

To check if the ingestion worked, open Azure Data Explorer and query the created table:

And it looks like the data has been imported.

Point of interest

One thing I noticed is that the ingested files stay in the blob container. So what happens if the ingestion fails at 50% and I need to run the LightIngest command again? The already ingested records are ingested a second time, and that telemetry is duplicated in the database.

A nice addition to the tool would be an option to move files to another container once they have been ingested.
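Until such an option exists, one possible workaround is to keep your own record of which blobs were ingested and only submit the remaining ones on a rerun. The sketch below assumes a hypothetical local ledger file (`ingested.txt`), hypothetical blob names, and that you can determine which blobs completed successfully; it only computes the remaining work and does not call LightIngest or move any blobs.

```python
import tempfile
from pathlib import Path


def load_ledger(path):
    """Return the set of blob names already recorded as ingested."""
    p = Path(path)
    if not p.exists():
        return set()
    lines = p.read_text(encoding="utf-8").splitlines()
    return {line.strip() for line in lines if line.strip()}


def record_ingested(path, blob_names):
    """Append blob names to the ledger once they have been ingested successfully."""
    with open(path, "a", encoding="utf-8") as f:
        for name in blob_names:
            f.write(name + "\n")


def remaining(all_blobs, ledger):
    """Blobs that still need to be ingested, in their original order."""
    return [b for b in all_blobs if b not in ledger]


# Hypothetical blob names; suppose the first run stopped after two blobs.
blobs = ["15.json", "16.json", "17.json", "18.json"]
with tempfile.TemporaryDirectory() as tmp:
    ledger_path = Path(tmp) / "ingested.txt"
    record_ingested(ledger_path, blobs[:2])
    todo = remaining(blobs, load_ledger(ledger_path))

print(todo)  # ['17.json', '18.json']
```

The remaining blob names could then be passed to a new ingestion run, for example through LightIngest’s `-pattern` or `-prefix` arguments, or moved to a separate container by your own script.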

Conclusion

LightIngest is a lightweight tool for importing data into Azure Data Explorer, and the wizard in Azure Data Explorer makes it very easy to use. It works well for historical data that needs to be imported once. But if you are looking for a solution that ingests data continuously, LightIngest isn’t the tool for you, because the ingested files stay in the source container.
